1.12. Dislib tutorial

This tutorial will show the basics of using dislib.

Setup

First, we need to start an interactive PyCOMPSs session:

[1]:

import pycompss.interactive as ipycompss

import os

if 'BINDER_SERVICE_HOST' in os.environ:

    ipycompss.start(graph=True,

                    project_xml='../xml/project.xml',

                    resources_xml='../xml/resources.xml')

else:

    ipycompss.start(graph=True, monitor=1000)

******************************************************

*************** PyCOMPSs Interactive *****************

******************************************************

* - Starting COMPSs runtime... *

* - Log path : /home/user/.COMPSs/Interactive_01/

* - PyCOMPSs Runtime started... Have fun! *

******************************************************

Next, we import dislib and we are all set to start working!

[2]:

import dislib as ds

Distributed arrays

The main data structure in dislib is the distributed array (or ds-array). A ds-array is a distributed representation of a 2-dimensional array that can be manipulated like a regular Python object. Usually, rows in the array represent samples, while columns represent features.

To create a random array we can run the following NumPy-like command:

[3]:

x = ds.random_array(shape=(500, 500), block_size=(100, 100))

print(x.shape)

x

(500, 500)

[3]:

ds-array(blocks=(...), top_left_shape=(100, 100), reg_shape=(100, 100), shape=(500, 500), sparse=False)

Now x is a 500x500 ds-array of random numbers stored in blocks of 100x100 elements. Note that x is not stored in memory. Instead, random_array generates the contents of the array in tasks that are usually executed remotely. This makes it possible to create arrays that are too large to fit in the memory of a single machine.
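The relation between shape and block_size can be sketched in plain Python. This is only an illustration of the arithmetic, not dislib code: block_grid and block_shape are hypothetical helpers, and the sketch assumes that leftover rows and columns end up in smaller blocks at the edges of the array.

```python
import math


def block_grid(shape, block_size):
    """Return how many blocks a 2-D array is split into along each axis."""
    n_rows, n_cols = shape
    block_rows, block_cols = block_size
    # Ceiling division: a final, smaller block holds any leftover rows/columns.
    return math.ceil(n_rows / block_rows), math.ceil(n_cols / block_cols)


def block_shape(shape, block_size, i, j):
    """Return the shape of block (i, j); edge blocks may be smaller."""
    n_rows, n_cols = shape
    block_rows, block_cols = block_size
    rows = min(block_rows, n_rows - i * block_rows)
    cols = min(block_cols, n_cols - j * block_cols)
    return rows, cols


grid = block_grid((500, 500), (100, 100))
print(grid)  # (5, 5)
```

Under these assumptions, the 500x500 array above corresponds to a 5x5 grid of blocks, so an operation that works block by block can be split into up to 25 independent pieces.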

Internally, x holds a nested list of Futures, one per block, that represent the actual data (wherever it is stored).

To see this, we can access the _blocks field of x:

[4]:

x._blocks[0][0]

[4]:

<pycompss.runtime.binding.Future at 0x7fb45006c4a8>

block_size is useful to control the granularity of dislib algorithms.

To retrieve the actual contents of x, we use collect, which synchronizes the data and returns the equivalent NumPy array:

[5]:

x.collect()

[5]:

array([[0.64524889, 0.69250058, 0.97374899, ..., 0.36928149, 0.75461806,

0.98784805],

[0.61753454, 0.092557 , 0.4967433 , ..., 0.87482708, 0.44454572,

0.0022951 ],

[0.78473344, 0.15288068, 0.70022708, ..., 0.82488172, 0.16980005,

0.30608108],

...,

[0.16257112, 0.94326181, 0.26206143, ..., 0.49725598, 0.80564738,

0.69616444],

[0.25089352, 0.10652958, 0.79657793, ..., 0.86936011, 0.67382938,

0.78140887],

[0.17716041, 0.24354163, 0.52866266, ..., 0.12053584, 0.9071268 ,

0.55058659]])
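The idea of holding Futures and synchronizing only when the data is needed can be mimicked with Python's standard concurrent.futures module. The sketch below is an analogy under that assumption, not how PyCOMPSs or dislib work internally; make_block and this collect function are hypothetical names used for illustration.

```python
import random
from concurrent.futures import ThreadPoolExecutor


def make_block(n_rows, n_cols, seed):
    """Generate one block of random numbers in [0, 1)."""
    rng = random.Random(seed)
    return [[rng.random() for _ in range(n_cols)] for _ in range(n_rows)]


def collect(blocks):
    """Wait for every block and stitch the blocks into one list of rows."""
    rows = []
    for block_row in blocks:
        parts = [future.result() for future in block_row]  # synchronize
        for pieces in zip(*parts):
            # Concatenate the matching row of every block in this block row.
            rows.append([value for piece in pieces for value in piece])
    return rows


with ThreadPoolExecutor(max_workers=4) as pool:
    # A 2 x 2 grid of futures, each standing for a 3 x 3 block.
    blocks = [[pool.submit(make_block, 3, 3, seed=2 * i + j) for j in range(2)]
              for i in range(2)]
    first_block = blocks[0][0]   # a Future, not the data itself
    full = collect(blocks)       # a plain 6 x 6 list of lists

print(type(first_block).__name__, len(full), len(full[0]))
```

As with the ds-array, the grid only contains handles to the data until the blocks are explicitly gathered.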

Another way of creating ds-arrays is from array-like structures such as NumPy arrays or lists:

[6]:

x1 = ds.array([[1, 2, 3], [4, 5, 6]], block_size=(1, 3))

x1

[6]:

ds-array(blocks=(...), top_left_shape=(1, 3), reg_shape=(1, 3), shape=(2, 3), sparse=False)

Distributed arrays can also store sparse data in CSR format:

[7]:

from scipy.sparse import csr_matrix

sp = csr_matrix([[0, 0, 1], [1, 0, 1]])

x_sp = ds.array(sp, block_size=(1, 3))

x_sp

[7]:

ds-array(blocks=(...), top_left_shape=(1, 3), reg_shape=(1, 3), shape=(2, 3), sparse=True)

In this case, collect returns a CSR matrix as well:

[8]:

x_sp.collect()

[8]:

<2x3 sparse matrix of type '<class 'numpy.int64'>'

with 3 stored elements in Compressed Sparse Row format>
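For readers unfamiliar with CSR, the following plain-Python sketch shows what the format stores for the small matrix above. The function to_csr is a hypothetical helper written for illustration; the three arrays it returns use the same names as the data, indices and indptr attributes of a SciPy csr_matrix.

```python
def to_csr(dense):
    """Convert a dense list of rows into CSR arrays (data, indices, indptr)."""
    data, indices, indptr = [], [], [0]
    for row in dense:
        for col, value in enumerate(row):
            if value != 0:
                data.append(value)     # the non-zero value itself
                indices.append(col)    # the column it lives in
        indptr.append(len(data))       # where the next row starts in data
    return data, indices, indptr


data, indices, indptr = to_csr([[0, 0, 1], [1, 0, 1]])
print(data)     # [1, 1, 1]
print(indices)  # [2, 0, 2]
print(indptr)   # [0, 1, 3]
```

Only the three non-zero entries are kept, which matches the "3 stored elements" reported in the output above.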

Loading data

A typical way of creating ds-arrays is to load data from disk. Dislib currently supports reading data in CSV and SVMLight formats like this:

[9]:

x, y = ds.load_svmlight_file("./files/libsvm/1", block_size=(20, 100), n_features=780, store_sparse=True)

print(x)

csv = ds.load_txt_file("./files/csv/1", block_size=(500, 122))

print(csv)

ds-array(blocks=(...), top_left_shape=(20, 100), reg_shape=(20, 100), shape=(61, 780), sparse=True)

ds-array(blocks=(...), top_left_shape=(500, 122), reg_shape=(500, 122), shape=(4235, 122), sparse=False)
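As a reminder of what an SVMLight file contains, each line holds a label followed by index:value pairs for the non-zero features. The sketch below parses a single such line in plain Python. It is a simplified illustration, not the parser dislib uses: parse_svmlight_line is a hypothetical helper, the sample line is made up, and optional fields such as qid are not handled.

```python
def parse_svmlight_line(line):
    """Parse one 'label index:value ...' line into (label, {index: value})."""
    line = line.split("#", 1)[0]          # drop a trailing comment, if any
    label, *pairs = line.split()
    features = {}
    for pair in pairs:
        index, value = pair.split(":")
        features[int(index)] = float(value)
    return float(label), features


label, features = parse_svmlight_line("1 3:0.5 10:2.0 780:1.0  # sample")
print(label, features)
```

Because only non-zero features are listed, the total number of features cannot always be inferred from the file, which is one reason to pass n_features explicitly as in the call above.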

Slicing

Similar to NumPy, ds-arrays support several types of slicing, shown below. Note that slicing a ds-array creates a new ds-array.

[10]:

x = ds.random_array((50, 50), (10, 10))

Get a single row:

[11]:

x[4]

[11]:

ds-array(blocks=(...), top_left_shape=(10, 10), reg_shape=(10, 10), shape=(1, 50), sparse=False)

Get a single element:

[12]:

x[2, 3]

[12]:

ds-array(blocks=(...), top_left_shape=(1, 1), reg_shape=(1, 1), shape=(1, 1), sparse=False)

Get a set of rows or a set of columns:

[13]:

# Consecutive rows

print(x[10:20])

# Consecutive columns

print(x[:, 10:20])

# Non-consecutive rows

print(x[[3, 7, 22]])

# Non-consecutive columns

print(x[:, [5, 9, 48]])

ds-array(blocks=(...), top_left_shape=(10, 10), reg_shape=(10, 10), shape=(10, 50), sparse=False)

ds-array(blocks=(...), top_left_shape=(10, 10), reg_shape=(10, 10), shape=(50, 10), sparse=False)

ds-array(blocks=(...), top_left_shape=(10, 10), reg_shape=(10, 10), shape=(3, 50), sparse=False)

ds-array(blocks=(...), top_left_shape=(10, 10), reg_shape=(10, 10), shape=(50, 3), sparse=False)

Get any set of elements:

[14]:

x[0:5, 40:45]

[14]:

ds-array(blocks=(...), top_left_shape=(10, 10), reg_shape=(10, 10), shape=(5, 5), sparse=False)
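Since a ds-array is stored in blocks, a slice only involves the blocks it overlaps. The following sketch computes those block indices for contiguous slices with step 1. It illustrates the geometry only and makes no claim about how dislib implements slicing; blocks_touched is a hypothetical helper.

```python
def blocks_touched(shape, block_size, rows, cols):
    """Return the (i, j) indices of the blocks that a slice overlaps."""
    r_start, r_stop, _ = rows.indices(shape[0])
    c_start, c_stop, _ = cols.indices(shape[1])
    if r_start >= r_stop or c_start >= c_stop:
        return []  # empty selection
    row_blocks = range(r_start // block_size[0], (r_stop - 1) // block_size[0] + 1)
    col_blocks = range(c_start // block_size[1], (c_stop - 1) // block_size[1] + 1)
    return [(i, j) for i in row_blocks for j in col_blocks]


# x[0:5, 40:45] on a 50 x 50 array with 10 x 10 blocks
print(blocks_touched((50, 50), (10, 10), slice(0, 5), slice(40, 45)))  # [(0, 4)]
# x[10:20] selects one full row of blocks
print(blocks_touched((50, 50), (10, 10), slice(10, 20), slice(None)))
```

In this sketch, the 5x5 selection falls entirely inside a single block, while selecting rows 10 to 19 spans the five blocks of the second block row.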

Other functions

Apart from this, ds-arrays also provide other useful operations like transpose and mean:

[15]:

x.mean(axis=0).collect()

[15]:

array([0.551327 , 0.49044018, 0.47707326, 0.505077 , 0.48609203,

0.5046837 , 0.48576743, 0.50085461, 0.59721606, 0.52625186,

0.54670041, 0.51213254, 0.4790549 , 0.56172745, 0.54560812,

0.52904012, 0.54524971, 0.52370167, 0.44268389, 0.58325103,

0.4754059 , 0.4990062 , 0.55135695, 0.53576298, 0.44728801,

0.57918722, 0.46289081, 0.48786927, 0.46635653, 0.53229369,

0.50648353, 0.53486796, 0.5159688 , 0.50703502, 0.46818427,

0.59886016, 0.48181209, 0.51001161, 0.46914881, 0.57437929,

0.52491673, 0.49711231, 0.50817903, 0.50102322, 0.42250693,

0.51456321, 0.53393824, 0.47924624, 0.49860827, 0.49424366])

[16]:

x.transpose().collect()

[16]:

array([[0.75899854, 0.83108977, 0.28083382, ..., 0.65348721, 0.10747938,

0.13453309],

[0.70515755, 0.22656129, 0.60863163, ..., 0.10640133, 0.3311688 ,

0.50884584],

[0.71224037, 0.95907871, 0.6010006 , ..., 0.41099068, 0.5671029 ,

0.06170055],

...,

[0.39789773, 0.69988175, 0.93784369, ..., 0.24439267, 0.45685381,

0.93017544],

[0.22410234, 0.13491992, 0.75906239, ..., 0.96917569, 0.96204333,

0.14629864],

[0.81496796, 0.96925576, 0.58510411, ..., 0.65520011, 0.05744591,

0.78974985]])
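An operation such as mean(axis=0) lends itself to block-wise computation: each block can produce a partial result, and the partial results are then combined. The sketch below shows this pattern in plain Python for row blocks. It is an assumption about the general approach, not a description of dislib's implementation, and the function names are hypothetical.

```python
def column_sums(block):
    """Partial result for one row block: per-column sums and the row count."""
    return [sum(col) for col in zip(*block)], len(block)


def blockwise_column_mean(row_blocks):
    """Combine the partial results of every block into the column means."""
    partials = [column_sums(block) for block in row_blocks]
    total_rows = sum(count for _, count in partials)
    totals = [sum(values) for values in zip(*(sums for sums, _ in partials))]
    return [total / total_rows for total in totals]


# Five rows split into row blocks of two rows; the last block is smaller.
row_blocks = [
    [[1.0, 10.0], [2.0, 20.0]],
    [[3.0, 30.0], [4.0, 40.0]],
    [[5.0, 50.0]],
]
print(blockwise_column_mean(row_blocks))  # [3.0, 30.0]
```

Carrying the row count alongside the sums keeps the result correct even when the last block has fewer rows than the others.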

Machine learning with dislib

Dislib provides an estimator-based API very similar to scikit-learn. To run an algorithm, we first create an estimator. For example, a K-means estimator:

[17]:

from dislib.cluster import KMeans

km = KMeans(n_clusters=3)

Now, we create a ds-array with some blob data, and fit the estimator:

[18]:

from sklearn.datasets import make_blobs

# create ds-array

x, y = make_blobs(n_samples=1500)

x_ds = ds.array(x, block_size=(500, 2))

km.fit(x_ds)

[18]:

KMeans(arity=50, init='random', max_iter=10, n_clusters=3,

random_state=<mtrand.RandomState object at 0x7fb51401f9d8>, tol=0.0001,

verbose=False)

Finally, we can make predictions on new (or the same) data:

[19]:

y_pred = km.predict(x_ds)

y_pred

[19]:

ds-array(blocks=(...), top_left_shape=(500, 1), reg_shape=(500, 1), shape=(1500, 1), sparse=False)

y_pred is a ds-array of predicted labels for x_ds.
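Conceptually, K-means prediction assigns each sample to its nearest cluster center. The sketch below shows that assignment step in plain Python with made-up centers and samples. It is a minimal illustration of the idea, not dislib's distributed implementation, and nearest_center and predict are hypothetical names.

```python
import math


def nearest_center(sample, centers):
    """Return the index of the center closest to the sample."""
    distances = [math.dist(sample, center) for center in centers]
    return distances.index(min(distances))


def predict(samples, centers):
    """Label every sample with the index of its nearest center."""
    return [nearest_center(sample, centers) for sample in samples]


centers = [(0.0, 0.0), (10.0, 10.0), (-10.0, 5.0)]
samples = [(0.5, -0.2), (9.0, 11.0), (-8.0, 4.0), (1.0, 1.0)]
print(predict(samples, centers))  # [0, 1, 2, 0]
```

Each sample is labeled independently of the others, which is why this step can be applied to every block of samples separately.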

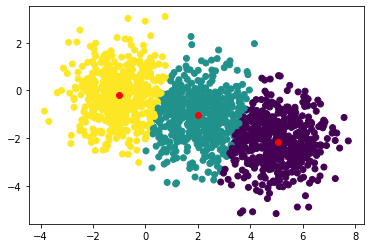

Let’s plot the results:

[20]:

%matplotlib inline

import matplotlib.pyplot as plt

centers = km.centers

# set the color of each sample to the predicted label

plt.scatter(x[:, 0], x[:, 1], c=y_pred.collect())

# plot the computed centers in red

plt.scatter(centers[:, 0], centers[:, 1], c='red')

[20]:

<matplotlib.collections.PathCollection at 0x7fb42b4fc518>

Note that we need to call y_pred.collect() to retrieve the actual labels and plot them. The rest is the same as if we were using scikit-learn.

Now let’s try a more complex example that uses some preprocessing tools.

First, we load a classification data set from scikit-learn into ds-arrays.

Note that this step is only necessary for demonstration purposes. Ideally, your data should already be loaded into ds-arrays.

[21]:

from sklearn.datasets import load_breast_cancer

from sklearn.model_selection import train_test_split

x, y = load_breast_cancer(return_X_y=True)

x_train, x_test, y_train, y_test = train_test_split(x, y)

x_train = ds.array(x_train, block_size=(100, 10))

y_train = ds.array(y_train.reshape(-1, 1), block_size=(100, 1))

x_test = ds.array(x_test, block_size=(100, 10))

y_test = ds.array(y_test.reshape(-1, 1), block_size=(100, 1))

Next, we can see how support vector machines perform in classifying the data. We first fit the model (ignore any warnings in this step):

[22]:

from dislib.classification import CascadeSVM

csvm = CascadeSVM()

csvm.fit(x_train, y_train)

/usr/lib/python3.6/site-packages/dislib-0.4.0-py3.6.egg/dislib/classification/csvm/base.py:374: RuntimeWarning: overflow encountered in exp

k = np.exp(k)

/usr/lib/python3.6/site-packages/dislib-0.4.0-py3.6.egg/dislib/classification/csvm/base.py:342: RuntimeWarning: invalid value encountered in double_scalars

delta = np.abs((w - self._last_w) / self._last_w)

[22]:

CascadeSVM(c=1, cascade_arity=2, check_convergence=True, gamma='auto',

kernel='rbf', max_iter=5, random_state=None, tol=0.001,

verbose=False)

Now we can make predictions on new data using csvm.predict(), or we can get the model accuracy on the test set with:

[23]:

score = csvm.score(x_test, y_test)

score represents the classifier accuracy; however, it is returned as a Future. We need to synchronize to get the actual value:

[24]:

from pycompss.api.api import compss_wait_on

print(compss_wait_on(score))

0.5944055944055944
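Accuracy here means the fraction of test samples whose predicted label matches the true label. A minimal plain-Python sketch of that metric, using made-up labels and a hypothetical accuracy helper, could look like this:

```python
def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label equals the true label."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


y_true = [1, 0, 1, 1, 0]
y_pred = [1, 1, 1, 0, 0]
print(accuracy(y_true, y_pred))  # 0.6
```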

The accuracy should be around 0.6, which is not very good. We can scale the data before classification to improve accuracy. This can be achieved using dislib’s StandardScaler.

The StandardScaler provides the same API as other estimators. In this case, however, instead of making predictions on new data, we transform it:

[25]:

from dislib.preprocessing import StandardScaler

sc = StandardScaler()

# fit the scaler with train data and transform it

scaled_train = sc.fit_transform(x_train)

# transform test data

scaled_test = sc.transform(x_test)

Now scaled_train and scaled_test are the scaled samples. Let’s see how SVM performs now.

[26]:

csvm.fit(scaled_train, y_train)

score = csvm.score(scaled_test, y_test)

print(compss_wait_on(score))

0.972027972027972

The new accuracy should be around 0.9, which is a great improvement!
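The scaling step can be sketched in plain Python to show the fit/transform split: column statistics are computed on the training data only and then reused for the test data. ColumnScaler is a hypothetical stand-in written for illustration, assuming standardization to zero mean and unit variance per column. It is not dislib's StandardScaler.

```python
import statistics


class ColumnScaler:
    """Hypothetical stand-in for a standard scaler, one mean/std per column."""

    def fit(self, rows):
        columns = list(zip(*rows))
        self.means = [statistics.fmean(col) for col in columns]
        # Population standard deviation; constant columns fall back to 1.0.
        self.stds = [statistics.pstdev(col) or 1.0 for col in columns]
        return self

    def transform(self, rows):
        return [[(v - m) / s for v, m, s in zip(row, self.means, self.stds)]
                for row in rows]


train = [[1.0, 100.0], [2.0, 300.0], [3.0, 500.0]]
test = [[2.0, 300.0], [4.0, 700.0]]

scaler = ColumnScaler().fit(train)      # statistics come from train only
scaled_train = scaler.transform(train)
scaled_test = scaler.transform(test)    # reuse the train statistics
print(scaled_test[0])  # [0.0, 0.0]
```

After scaling, both columns have comparable ranges. This matters for distance-based kernels such as RBF, where features with large numeric ranges would otherwise dominate the distances between samples.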

Close the session

To finish the session, we need to stop PyCOMPSs:

[27]:

ipycompss.stop()

****************************************************

*************** STOPPING PyCOMPSs ******************

****************************************************

Warning: some of the variables used with PyCOMPSs may

have not been brought to the master.

****************************************************